Non-political news of early Feb 2019

I find myself wanting to share a little of the interesting direction I have been exploring; I’m teaching myself to build neural network models (which are the most common form of models behind artificial intelligence, or AI), and it’s been a wild journey. This intro is long, so skip down if you wish.

AI and brains

AI models recognize patterns; you can build a neural network model to recognize pretty much any pattern that you can imagine. You can teach a computer to find cancer cells in blood smears, to recognize a baby crying, to recognize a stop sign, to recognize which phrases in one language correspond to which phrases in another, and so on. The field of AI is on fire and neural networks are already used in thousands of products that you use daily, from weather and traffic reports to advertising. The growth has been made possible by the huge increase in the availability of digital data, a concomitant increase in computing power (especially improvements in GPUs), and a series of modeling discoveries with practical implications. There is no actual “intelligence” involved in AI models, at least as a human would conceive of it, because there is no reasoning going on, but as a researcher at Google recently commented, if you paste together enough pattern-recognizing models, they will be so useful that we may not care very much about the gaps that remain.

A mathematical model is like a machine you build with the purpose of recreating some pattern that you see in the world. Since the world is complicated, the challenge is to build the simplest machine that can recreate the pattern. If you feed the machine some relevant data and its output looks like the real thing, then you conclude that you have figured out how the system works (sometimes you’re wrong, but estimating how confident you can be in your results is a long, tedious story).

Models typically have anywhere from 1 to 10 (or in extreme cases, a few hundred) knobs to adjust, which are called parameters. Several things set neural network models apart from other kinds of modeling; the main one is that you are training millions of parameters at the same time, so many that it’s difficult to know exactly what the model is doing. A neural net model is like a machine with a million knobs. Some combination of settings on those knobs is guaranteed to produce a pattern that looks exactly like the real thing; it will appear that the machine has learned the important characteristics of the system. However, you are never 100% sure what it has learned. There are lots of examples of AI getting things fabulously wrong: for example, researchers showed that by adding a few small black rectangles to a large, red, octagonal, totally obvious stop sign, they could make an image-recognition model of the kind used in self-driving cars fail to identify it. Why? Because the AI model had learned to identify something else about the pictures that it was trained on. It’s difficult to figure out in reverse what a model learned (although that is an area of intense research activity), so the solution usually employed is brute force: just give the model more, and more varied, training data. There’s also an ongoing fascination with how the very simple neural network models differ from biological brains, which have many more neurons, of more types, with far more complicated networks (thousands more connections per neuron, a mind-boggling array of feedback loops, and so on).

There’s been tremendous progress in brain science as well, although brain science is still in its infancy. Consider that the human brain has approximately 100 billion neurons, with 100 trillion connections between them. Those connections matter immensely; neurons deliver information over long distances to specific parts of the brain (for example, from your eye to your visual cortex); those parts process the information in different ways, and pass on other information to other parts, always enhancing, altering, or inhibiting it. If the wrong connections were made, the brain wouldn’t work properly; we might not be able to see, or understand visual cues, or walk, or reason, or we might have any of a million other problems. Yet if you consider that our DNA, our instruction manual, has approximately 3 billion base pairs, each of which represents two bits of information, then you can quickly see that our DNA holds less than a thousandth of the information needed to specify how all of the connections between neurons should be made. That tells us immediately that the brain must somehow self-organize during development. Researchers are working furiously on that fascinating puzzle: how do our genes create a working system with all of its myriad connections, starting from a very large number of neurons that are not connected, or only connected locally in the embryo, using only simple rules without a complete instruction manual?
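For anyone who wants to check the arithmetic, here is a rough back-of-envelope sketch in Python. The neuron, connection, and base-pair counts are the round figures quoted above. The bits-per-connection figure is my own assumption: I suppose that a wiring diagram has to name the target neuron of every connection, which takes about log2(100 billion), or roughly 37, bits each. Even under the far more generous assumption of a single bit per connection, the genome comes up short by more than a factor of a thousand.

```python
import math

# Round figures quoted in the text above.
NEURONS = 100e9            # about 100 billion neurons
CONNECTIONS = 100e12       # about 100 trillion connections
BASE_PAIRS = 3e9           # about 3 billion base pairs
BITS_PER_BASE_PAIR = 2     # four possible bases -> 2 bits

# Total raw information capacity of the genome, in bits.
genome_bits = BASE_PAIRS * BITS_PER_BASE_PAIR

# Assumption (not from any source): an explicit wiring diagram must
# name the target neuron of each connection, costing log2(NEURONS) bits.
bits_per_connection = math.log2(NEURONS)
wiring_bits = CONNECTIONS * bits_per_connection

# Fraction of the wiring diagram the genome could hold.
fraction_explicit = genome_bits / wiring_bits

# Very generous lower bound: pretend each connection needs only 1 bit.
fraction_generous = genome_bits / CONNECTIONS

print(f"Genome capacity:         {genome_bits:.1e} bits")
print(f"Explicit wiring diagram: {wiring_bits:.1e} bits")
print(f"Fraction (explicit):     {fraction_explicit:.1e}")
print(f"Fraction (1 bit each):   {fraction_generous:.1e}")
```

Either way the fraction is well under one in a thousand, and that is before counting everything else the genome has to encode.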

With that rather lengthy intro, this is a wonderful interview about developing brains, which turn out to generate a lot of spontaneous noise. We don’t know why.

http://nautil.us/issue/68/context/why-the-brain-is-so-noisy

Mad cow disease

Chronic wasting disease, a prion disease related to mad-cow disease, is spreading in cervids (deer, elk, and moose) in the US. Prion diseases in humans can incubate for years or decades. Although the risk is low, that does it for me: no venison! No need to read the article, really.

https://www.acsh.org/news/2018/01/10/cwd-mad-cow-disease-deer-threat-humans-12395

Things I hate to admit

I found a Yogi tea bag quote that I actually half like (damn!): “If you allow yourself to be successful, you shall be successful.” It’s a great reminder that most of our problems are self-created. Since I read it, I’ve repeatedly caught myself preparing to fail on something (getting work done on time, learning something I want to learn, talking to someone I want to talk to, etc.), and then I think “Perhaps I should just allow myself to succeed!” It’s a hopeful thought.

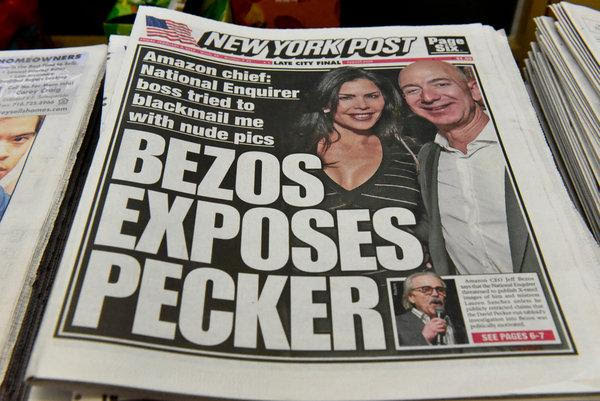

Headline of the decade

In case you hadn’t heard about it, this is about a consequential battle between Jeff Bezos, the head of Amazon and owner of the Washington Post, and David Pecker, who runs the National Enquirer. The National Enquirer revealed that Bezos had been having an extramarital affair. The political implications arise from the fact that the Washington Post has been extremely critical of Trump, and David Pecker and the National Enquirer have been big Trump supporters. Therefore it seems likely that Trump was using the National Enquirer and Pecker in a proxy war to take revenge on Bezos (don't worry, we will get to the fun part).

After the initial salvo, Bezos suggested publicly that the Enquirer’s motive for revealing his affair was political. The National Enquirer responded by threatening (secretly) to publish more and juicier photographs and love letters unless Bezos publicly recanted that suggestion. Instead, Bezos came out publicly and revealed their blackmail, making him a real hero for the moment (there’s also a question of whether a government agency was involved in procuring the letters, which would be a truly gigantic scandal).

Anyway, it was a once-in-a-lifetime alignment of the journalistic planets. I can just see the journalist who thought up the headline. A heavenly chorus of angels suddenly sustains one beautiful, deafening chord, her breath seems to have stopped, her skin is tingling, is she levitating? And Oh my God, the computer monitor is pulsing with a soft, golden glow! And then she snaps into action, kicking back her chair and sprinting (arms pumping, quads flexing, gaze fixed on the editor’s door), through the super-slow-motion chaos of falling coffee cups, cascading paper, and startled colleagues diving out of her way, her throat rippling as she screams “Beeeeeeeeeeeeeezooooooos Expoooooooooooses Peeeeeeecker!!!!” What a moment, what a day, what a year, what a headline!

Usain Bolt

Usain Bolt ties the NFL Combine record for the 40-yard dash (4.22 seconds), matching the fastest football players on record while hardly trying, in sweats and sneakers. And it's no small feat: a lot of football players are serious sprinters. I love this because it gives perspective on how fast he really is.

https://bleacherreport.com/articles/2818947-watch-usain-bolt-tie-nfl-combine-40-yard-dash-record-at-422-seconds

A major conservation success

Lastly, despite the incredibly polarized political climate we are in, the Senate just passed one of the biggest conservation bills in a long time. To me, this is evidence of what Ann has been teaching me: that the conservation movement is almost unrecognizable these days, compared to what it used to be. Despite the photos of charismatic megafauna, like starving polar bears and sad tiger cubs, that are still hawked by conservation groups, there’s a much more mature, powerful, organized movement than ever before, which works by building coalitions one player at a time from the ground up, and involving every interest group that you could imagine. This article describes just such a years-long effort paying off. Go, conservationists, go!

https://www.washingtonpost.com/climate-environment/2019/02/12/senate-just-passed-decades-biggest-public-lands-package-heres-whats-it/